Over the last few years, we have seen more and more KVM customers adopt software-defined storage solutions, especially Ceph. Deployments often start small and grow over time, and this applies both to CloudStack and to the Ceph cluster used as Primary Storage for VMs. As a result, we receive more frequent customer requests to change the Ceph monitor IP/FQDN in CloudStack. Whether for maintenance reasons or to grow the Ceph deployment, people add or move Ceph monitors, switch from IP to FQDN, and so on.

Below we describe a procedure for changing the Ceph monitor configuration in a running CloudStack deployment. Native KVM behaviour imposes some limitations, which are explained at the end of this article.

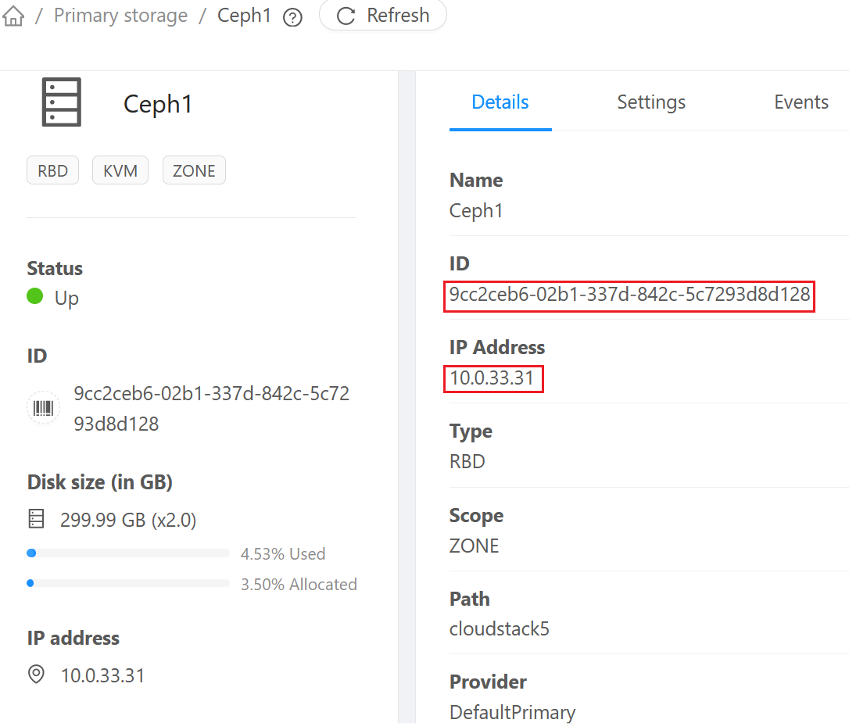

In our example below, we are already using a Ceph monitor with the IP “10.0.33.31” and we have a few VMs running on Ceph. We are going to replace this IP with the FQDN “cephmon.domain.local” (you can use another IP instead). If you use an FQDN, you must ensure that proper DNS resolution is in place.

Example current configuration

Let’s observe the current configuration:

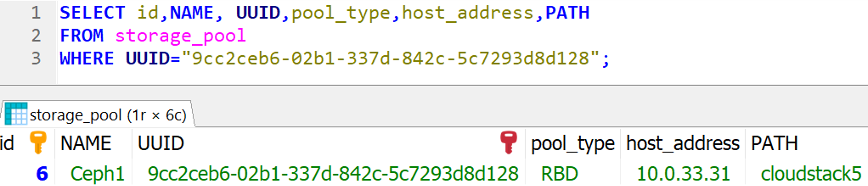

Checking the same info from the CloudStack database – you should see something like this:

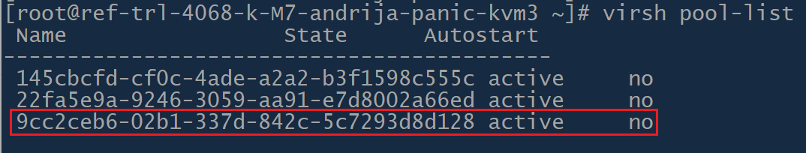
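For readers following along on the command line, the database lookup can be sketched roughly as below. This is a minimal sketch, not the exact query behind the screenshot: the helper name `pool_query` is hypothetical, the column list is an assumption based on the `storage_pool` table, and the `cloud` user and database names in the usage comment are assumed defaults.

```shell
# Hypothetical helper: print a read-only query for one storage pool row.
# The column list is an assumption; adjust it to your schema if needed.
pool_query() {
    pool_id="$1"
    # Accept only a numeric ID so nothing unexpected ends up in the SQL text.
    case "$pool_id" in
        ''|*[!0-9]*) echo "pool id must be numeric" >&2; return 1 ;;
    esac
    printf "SELECT id, name, uuid, pool_type, host_address, port, path FROM storage_pool WHERE id = %s AND removed IS NULL;\n" "$pool_id"
}

# Usage on the database server (assumed user/database names, not executed here):
#   pool_query 6 | mysql -u cloud -p cloud
pool_query 6
```

The `host_address` column is the one that holds the Ceph monitor IP or FQDN, so that is the value to note before changing anything.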

Let’s check the storage “pools” defined in KVM:

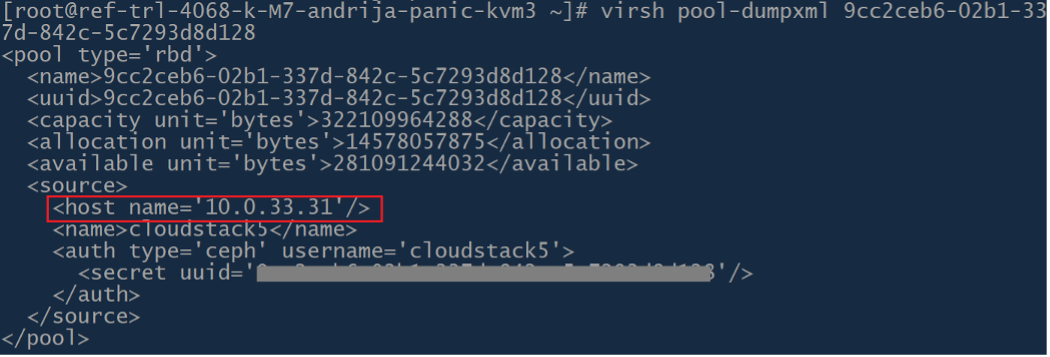

We can see details of our specific pool:
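As a rough command-line equivalent of the two checks above, the sketch below lists the libvirt pools and prints only the monitor lines from the pool XML. The helper name `monitor_lines` is hypothetical, and the filter assumes each `<host .../>` element sits on a single line, which is how libvirt normally prints it.

```shell
# Pool UUID taken from the article's example.
POOL_UUID="9cc2ceb6-02b1-337d-842c-5c7293d8d128"

# Hypothetical helper: read pool XML on stdin and print only the
# <host .../> elements, i.e. the configured Ceph monitor(s).
monitor_lines() {
    grep -o "<host [^>]*>"
}

# Only talk to libvirt when virsh is actually installed on this machine.
if command -v virsh >/dev/null 2>&1; then
    virsh pool-list --all
    virsh pool-dumpxml "$POOL_UUID" | monitor_lines
fi
```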

Updating the CloudStack configuration

Now that we have a starting point, let’s see how we can change the monitor IP to an FQDN (or to another IP, for that matter) in CloudStack.

The high-level steps are as follows:

- Update the CloudStack DB

- Update the storage pool definition/XML on all KVM hosts

- Update the pool secret definition/XML on all KVM hosts

- Restart the libvirt daemon on those KVM hosts

The first step is to change the record in the CloudStack database with a simple SQL query. Our Ceph pool has ID=6 (UUID “9cc2ceb6-02b1-337d-842c-5c7293d8d128”), so this is the row we are updating:

UPDATE storage_pool SET host_address = 'cephmon.domain.local' WHERE id = 6;
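If you prefer to see the row before and after the change in one go, the update can be wrapped as in the sketch below. This is an illustration, not part of the original procedure: the helper name `update_monitor_sql` is hypothetical, the transaction wrapper is an added precaution, and the `cloud` user and database names in the usage comment are assumptions.

```shell
# Hypothetical helper: print SQL that shows the row, updates host_address,
# and shows the row again, all inside one transaction.
update_monitor_sql() {
    pool_id="$1"
    new_host="$2"
    # Numeric ID only; the host is expected to be a plain IP or FQDN.
    case "$pool_id" in
        ''|*[!0-9]*) echo "pool id must be numeric" >&2; return 1 ;;
    esac
    cat <<EOF
START TRANSACTION;
SELECT id, uuid, host_address FROM storage_pool WHERE id = ${pool_id};
UPDATE storage_pool SET host_address = '${new_host}' WHERE id = ${pool_id};
SELECT id, uuid, host_address FROM storage_pool WHERE id = ${pool_id};
COMMIT;
EOF
}

# Usage (assumed user/database names, not executed here):
#   update_monitor_sql 6 cephmon.domain.local | mysql -u cloud -p cloud
update_monitor_sql 6 cephmon.domain.local
```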

Next, we need to edit the storage pool definition on each KVM node with virsh. In our example, this is the pool with UUID “9cc2ceb6-02b1-337d-842c-5c7293d8d128”:

virsh pool-edit 9cc2ceb6-02b1-337d-842c-5c7293d8d128

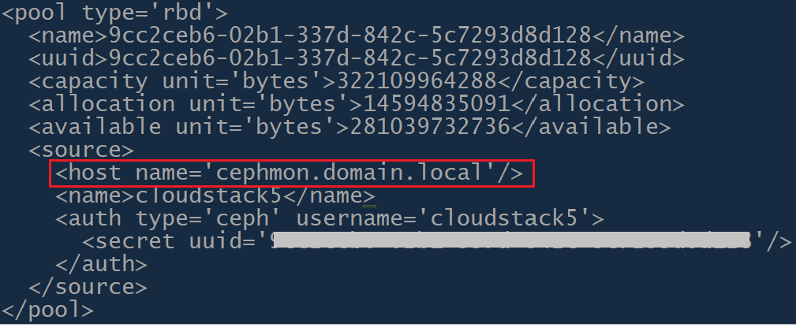

Here we define the new FQDN (or IP). In our example, the pool XML should look like this:

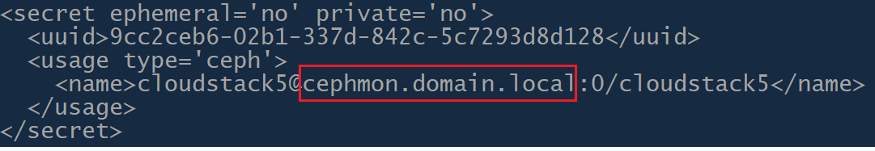
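When many KVM hosts need the same change, a non-interactive variant may be more convenient than opening an editor on each one. The sketch below is one possible alternative to `virsh pool-edit`, not the method used in this article: it dumps the persistent pool XML, swaps the monitor on the `<host>` line, and redefines the pool. The helper name `swap_monitor` is hypothetical, and the filter assumes the `<host .../>` element sits on one line. The libvirt restart described later is still needed.

```shell
# Values taken from the article's example.
POOL_UUID="9cc2ceb6-02b1-337d-842c-5c7293d8d128"
OLD_MON="10.0.33.31"
NEW_MON="cephmon.domain.local"

# Hypothetical helper: read pool XML on stdin and rewrite name='OLD' to
# name='NEW', but only on lines that contain a <host ...> element.
swap_monitor() {
    # Escape dots so an IP address is matched literally by sed.
    old_re=$(printf '%s' "$1" | sed 's/\./\\./g')
    sed "/<host /s/name=\(['\"]\)${old_re}\1/name=\1$2\1/"
}

# Only touch libvirt when virsh is actually installed on this machine.
if command -v virsh >/dev/null 2>&1; then
    tmp=$(mktemp)
    virsh pool-dumpxml --inactive "$POOL_UUID" | swap_monitor "$OLD_MON" "$NEW_MON" > "$tmp"
    virsh pool-define "$tmp"
    rm -f "$tmp"
fi
```

Restricting the substitution to `<host>` lines keeps any other text in the pool XML untouched, even if it happens to contain the same address.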

Next, we need to update the pool’s secret file. The corresponding secret files are always named after the pool, so in our case we will edit the ‘/etc/libvirt/secrets/9cc2ceb6-02b1-337d-842c-5c7293d8d128.xml’ file. Insert the new Ceph monitor FQDN or IP, which must be identical to the one in the pool’s XML file. The resulting file should look like the following:
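To make the same edit from the command line, something like the sketch below could be used. It assumes the old monitor address appears as plain text in the secret file, keeps a copy of the original outside the secrets directory, and uses a hypothetical helper name (`swap_in_file`) and a hypothetical backup location; treat it as an illustration rather than a required step.

```shell
# Secret file path and addresses taken from the article's example.
SECRET_FILE="/etc/libvirt/secrets/9cc2ceb6-02b1-337d-842c-5c7293d8d128.xml"
OLD_MON="10.0.33.31"
NEW_MON="cephmon.domain.local"

# Hypothetical helper: replace every literal OLD with NEW in FILE,
# after saving a copy of the original into BACKUP_DIR.
swap_in_file() {
    file="$1"; old="$2"; new="$3"
    backup_dir="${4:-${TMPDIR:-/tmp}}"   # assumed default backup location
    cp -p "$file" "$backup_dir/$(basename "$file").orig" || return 1
    # Escape dots so an IP address is matched literally by sed.
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    tmp=$(mktemp) || return 1
    # Write back with cat so the file keeps its owner and permissions.
    sed "s/${old_re}/${new}/g" "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Only act when the secret file actually exists on this host.
if [ -f "$SECRET_FILE" ]; then
    swap_in_file "$SECRET_FILE" "$OLD_MON" "$NEW_MON"
    grep -n "$NEW_MON" "$SECRET_FILE"
fi
```

The final `grep` is just a quick visual check that the new address is now present; compare it with the `<host>` value in the pool XML before restarting libvirt.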

Now that we have updated the Ceph monitor in all required places, we need to restart the libvirt daemon so that the changes are picked up.

systemctl restart libvirtd

This will trigger a cloudstack-agent reload/restart as well. Confirm that the pool (and the new Ceph monitor FQDN/IP) is still there after the agent restart (virsh pool-list, virsh pool-dumpxml). If the FQDN/IP in the secret file is not identical to the one in the pool’s XML file, the pool will be removed from libvirt during the restart, and the cloudstack-agent restart will NOT recreate the pool properly because the pool is absent while its secret file still exists. In that case, you’ll have to delete the corresponding secret files and restart libvirt again (rm /etc/libvirt/secrets/9cc2ceb6-02b1-337d-842c-5c7293d8d128.*), check that the pool is back in libvirt, and make the necessary changes again.
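The post-restart check can be scripted along these lines. This is a minimal sketch: the helper name `pool_uses_monitor` is hypothetical, and it only confirms that the pool XML reported by libvirt contains a `<host>` element with the expected monitor name.

```shell
# Values taken from the article's example.
POOL_UUID="9cc2ceb6-02b1-337d-842c-5c7293d8d128"
NEW_MON="cephmon.domain.local"

# Hypothetical helper: read pool XML on stdin and succeed only if a
# <host ...> line names the expected monitor (single or double quotes).
pool_uses_monitor() {
    grep "<host " | grep -qF -e "name='$1'" -e "name=\"$1\""
}

# Only talk to libvirt when virsh is actually installed on this machine.
if command -v virsh >/dev/null 2>&1; then
    virsh pool-list --all
    if virsh pool-dumpxml "$POOL_UUID" | pool_uses_monitor "$NEW_MON"; then
        echo "OK: pool $POOL_UUID uses monitor $NEW_MON"
    else
        echo "WARNING: pool $POOL_UUID is missing or does not use $NEW_MON" >&2
    fi
fi
```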

And that’s it, at least for any new VMs started from this moment onwards.

However, due to a native KVM limitation, there is no way to update the XML of running VMs, so they will continue to run connected to the old Ceph monitor. If the old Ceph monitor must no longer be used by any VM, you’ll have to ensure that each existing VM has been fully power-cycled (Stop and Start the VM via CloudStack).
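To find out which running VMs on a host still reference the old monitor, and therefore still need a Stop/Start, a check like the following may help. It is a sketch under the assumption that the monitor appears as a `<host name=.../>` element in each VM's live XML; the helper name `xml_has_monitor` is hypothetical.

```shell
# Old monitor address taken from the article's example.
OLD_MON="10.0.33.31"

# Hypothetical helper: read domain XML on stdin and succeed if any
# <host ...> line names the given monitor (single or double quotes).
xml_has_monitor() {
    grep "<host " | grep -qF -e "name='$1'" -e "name=\"$1\""
}

# Only talk to libvirt when virsh is actually installed on this machine.
if command -v virsh >/dev/null 2>&1; then
    # "virsh list --name" prints the names of the running domains.
    for vm in $(virsh list --name); do
        if virsh dumpxml "$vm" | xml_has_monitor "$OLD_MON"; then
            echo "$vm still uses $OLD_MON and needs a Stop/Start via CloudStack"
        fi
    done
fi
```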

Using a single Ceph monitor IP presents a single point of failure (SPOF) for a VM. Using an FQDN with DNS Round Robin behind it still means that only one of the Ceph monitors is used for each VM, which is again a SPOF for that VM. To provide some redundancy for connections to Ceph monitors, a new feature has been introduced in a recent CloudStack release (4.18.0.0) that makes it possible to define a comma-separated list of Ceph monitors when adding Ceph as a Primary Storage pool in CloudStack. This ensures that each VM’s XML definition contains a set of Ceph monitors, so if the primary Ceph monitor (the one the VM is connected to) goes down, libvirt will connect to another monitor with no downtime.
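To illustrate what "a set of Ceph monitors inside the XML" means, the sketch below expands a comma-separated monitor list into one libvirt `<host>` element per monitor. It only illustrates the XML shape and is not CloudStack's own code: the helper name `monitor_hosts_xml` and the monitor names are hypothetical, and 6789 is assumed as the monitor port.

```shell
# Hypothetical helper: turn "mon1,mon2,mon3" into one <host .../> element
# per monitor. The port defaults to 6789 (assumed here).
monitor_hosts_xml() {
    list="$1"
    port="${2:-6789}"
    # Split on commas inside a subshell so the caller's IFS is left alone.
    (
        IFS=','
        for mon in $list; do
            printf "<host name='%s' port='%s'/>\n" "$mon" "$port"
        done
    )
}

# Hypothetical monitor names, for illustration only.
monitor_hosts_xml "mon1.domain.local,mon2.domain.local,mon3.domain.local"
```

With several `<host>` entries present, the client has more than one monitor to try, which is what removes the single point of failure described above.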

About the author

Andrija Panic is a Cloud Architect at ShapeBlue, the Cloud Specialists, and is a committer and PMC member of Apache CloudStack. Andrija spends most of his time designing and implementing IaaS solutions based on Apache CloudStack.

Andrija Panic is a Cloud Architect at ShapeBlue and a PMC member of Apache CloudStack. With almost 20 years in the IT industry and over 12 years of intimate work with CloudStack, Andrija has helped some of the largest, worldwide organizations build their clouds, migrate from commercial forks of CloudStack, and has provided consulting and support to a range of public, private, and government cloud providers across the US, EMEA, and Japan. Away from work, he enjoys spending time with his daughters, riding his bike, and tries to avoid adrenaline-filled activities.

You can learn more about Andrija and his background by reading his Meet The Team blog.