For primary storage, CloudStack supports many managed storage solutions via storage plugins, such as SolidFire, Ceph, Datera, CloudByte and Nexenta. However, some managed storage solutions are not supported by CloudStack, one of which is Dell EMC PowerFlex (formerly known as VxFlexOS or ScaleIO). PowerFlex is a distributed, shared block storage system, similar to Ceph / RBD storage.

This feature provides a new storage plugin that enables the use of a Dell EMC PowerFlex v3.5 storage pool as managed Primary Storage for the KVM hypervisor, either as a zone-wide or a cluster-wide pool. The pool can be added from either the UI or the API.

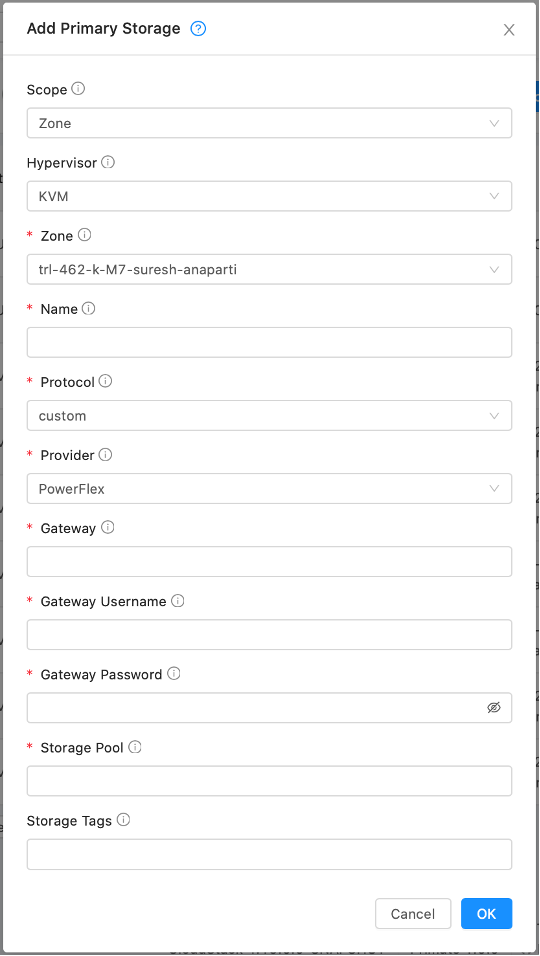

Adding a PowerFlex storage pool

To add a pool via the CloudStack UI, navigate to “Infrastructure -> Primary Storage -> Add Primary Storage” and specify the following:

- Scope: Zone-Wide (for Cluster-Wide, also specify the Pod and Cluster)

- Hypervisor: KVM

- Zone: Select the zone in which to add the pool

- Name: Specify a custom name for the storage pool

- Provider: PowerFlex

- Gateway: Specify the PowerFlex gateway

- Gateway Username: Specify the PowerFlex gateway username

- Gateway Password: Specify the PowerFlex gateway password

- Storage Pool: Specify the PowerFlex storage pool name

- Storage Tags: Add a storage tag for the pool, for use in compute/disk offerings

To add the pool via the API, use the createStoragePool API and specify the storage pool name, scope, zone (plus the cluster and pod for a cluster-wide pool), hypervisor as KVM, provider as PowerFlex, and the URL in the predefined format below:

PowerFlex storage pool URL format:

powerflex://<API_USER>:<API_PASSWORD>@<GATEWAY>/<STORAGEPOOL>

where,

<API_USER> : user name for API access

<API_PASSWORD> : url-encoded password for API access

<GATEWAY> : gateway host

<STORAGEPOOL> : storage pool name (case sensitive)
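The password has to be URL-encoded because characters such as “@” or “:” would otherwise collide with the delimiters of the URL itself. A minimal Python sketch of assembling the URL with the standard library's urllib.parse.quote; the helper name build_powerflex_url is hypothetical and not part of CloudStack:

```python
from urllib.parse import quote


def build_powerflex_url(api_user: str, api_password: str, gateway: str, storage_pool: str) -> str:
    """Assemble a PowerFlex primary storage URL (hypothetical helper)."""
    # safe="" percent-encodes every reserved character, including "@", ":" and "/",
    # so the password cannot be mistaken for part of the URL structure.
    encoded_password = quote(api_password, safe="")
    # The storage pool name is case sensitive, so it is passed through unchanged.
    return f"powerflex://{api_user}:{encoded_password}@{gateway}/{storage_pool}"


if __name__ == "__main__":
    print(build_powerflex_url("admin", "P@ssword123", "10.2.3.137", "cspool"))
    # prints: powerflex://admin:P%40ssword123@10.2.3.137/cspool
```

The output matches the URL used in the cmk example for this section.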

For example, the following cmk command adds a PowerFlex storage pool as zone-wide primary storage with the storage tag ‘powerflex’:

create storagepool name=mypowerflexpool scope=zone hypervisor=KVM provider=PowerFlex tags=powerflex url=powerflex://admin:P%40ssword123@10.2.3.137/cspool zoneid=ceee0b39-3984-4108-bd07-3ccffac961a9
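cmk takes care of authenticating API calls. A script that calls the createStoragePool API directly has to sign the request itself. The Python sketch below follows CloudStack's documented signing scheme (parameters sorted by name, values URL-encoded, HMAC-SHA1 over the lower-cased query string, Base64-encoded signature) and builds the same call as the cmk command. The API key, secret key and MANAGEMENT_SERVER host are placeholders, and the function name signed_query is hypothetical:

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def signed_query(params: dict, secret_key: str) -> str:
    """Build a signed CloudStack API query string (simplified sketch)."""
    # Sort by parameter name and URL-encode each value (spaces become %20).
    # Python and Java encode '*' and '~' differently; the values here contain neither.
    ordered = sorted(params.items(), key=lambda kv: kv[0].lower())
    query = "&".join(f"{k}={quote(str(v), safe='')}" for k, v in ordered)
    # HMAC-SHA1 over the lower-cased query string, then Base64 and URL-encode it.
    digest = hmac.new(secret_key.encode(), query.lower().encode(), hashlib.sha1).digest()
    signature = quote(base64.b64encode(digest).decode(), safe="")
    return f"{query}&signature={signature}"


params = {
    "command": "createStoragePool",
    "name": "mypowerflexpool",
    "scope": "zone",
    "hypervisor": "KVM",
    "provider": "PowerFlex",
    "tags": "powerflex",
    "url": "powerflex://admin:P%40ssword123@10.2.3.137/cspool",
    "zoneid": "ceee0b39-3984-4108-bd07-3ccffac961a9",
    "response": "json",
    "apikey": "YOUR_API_KEY",  # placeholder
}

if __name__ == "__main__":
    print("http://MANAGEMENT_SERVER:8080/client/api?" + signed_query(params, "YOUR_SECRET_KEY"))
```

Note that the already-encoded password in the url value is encoded a second time (“%40” becomes “%2540”), because the whole URL is itself a query parameter value.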

Service and Disk Offerings for PowerFlex

You can create service and disk offerings for a PowerFlex pool in the usual way, from either the UI or the API, using a unique storage tag. Use these offerings to deploy VMs and create data disks on the PowerFlex pool.

If QoS parameters (bandwidth limit and IOPS limit) need to be specified for a service or disk offering, pass the details parameter keys bandwidthLimitInMbps and iopsLimit to the API. For example, the following cmk commands create a service offering and a disk offering with the storage tag ‘powerflex’ and QoS parameters:

create serviceoffering name=pflex_instance displaytext=pflex_instance storagetype=shared provisioningtype=thin cpunumber=1 cpuspeed=1000 memory=1024 tags=powerflex serviceofferingdetails[0].bandwidthLimitInMbps=90 serviceofferingdetails[0].iopsLimit=9000

create diskoffering name=pflex_disk displaytext=pflex_disk storagetype=shared provisioningtype=thick disksize=3 tags=powerflex details[0].bandwidthLimitInMbps=70 details[0].iopsLimit=7000

If QoS parameters are not passed explicitly, they default to 0, which means unlimited.
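When offerings are created from a script, the QoS details can be rendered in the same map syntax as the cmk commands above (serviceofferingdetails for service offerings, details for disk offerings). A small Python sketch; the helper names are hypothetical, and leaving a limit out simply relies on the default of 0 (unlimited):

```python
def qos_detail_args(prefix: str, bandwidth_mbps: int = 0, iops: int = 0) -> list:
    """Render PowerFlex QoS limits as cmk map arguments (hypothetical helper)."""
    details = {"bandwidthLimitInMbps": bandwidth_mbps, "iopsLimit": iops}
    # A limit of 0 means "unlimited", which is also the default, so it is not passed.
    return [f"{prefix}[0].{key}={value}" for key, value in details.items() if value > 0]


def describe_limit(value: int, unit: str) -> str:
    """Human-readable form of an SDC limit; 0 means no limit on the volume."""
    return "unlimited" if value == 0 else f"{value} {unit}"


if __name__ == "__main__":
    print(" ".join(qos_detail_args("details", bandwidth_mbps=70, iops=7000)))
    # prints: details[0].bandwidthLimitInMbps=70 details[0].iopsLimit=7000
    print(describe_limit(0, "IOPS"))  # prints: unlimited
```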

VM and Volume operations

Lifecycle operations of CloudStack resources (templates, volumes, and snapshots) in a PowerFlex storage pool can be managed through the new plugin. The following operations are supported for a PowerFlex pool:

- VM lifecycle and operations:

- Deploy system VMs from the systemvm template

- Deploy user VM(s) using the selected template in QCOW2 & RAW formats, and from an ISO image

- Start, Stop, Restart, Reinstall, Destroy VM(s)

- VM snapshot (disk-only, snapshot with memory is not supported)

- Migrate VM from one host to another (within and across clusters, for zone-wide primary storage)

- Volume lifecycle and operations:

- Note: PowerFlex volumes are in RAW format. The disk size is rounded to the nearest 8GB as the PowerFlex uses an 8GB disk boundary.

- Create ROOT disks using the selected template (in QCOW2 & RAW formats, seeding from NFS secondary storage and direct download templates)

- List, Detach, Resize ROOT volumes

- Create, List, Attach, Detach, Resize, Delete DATA volumes

- Create, List, Revert, Delete snapshots of volumes (with backup in Primary, no backup to secondary storage)

- Create template (on secondary storage in QCOW2 format) from PowerFlex volume or snapshot

- Support PowerFlex volume QoS using details parameter keys: bandwidthLimitInMbps, iopsLimit in service/disk offering. These are the SDC (ScaleIO Data Client) limits for the volume.

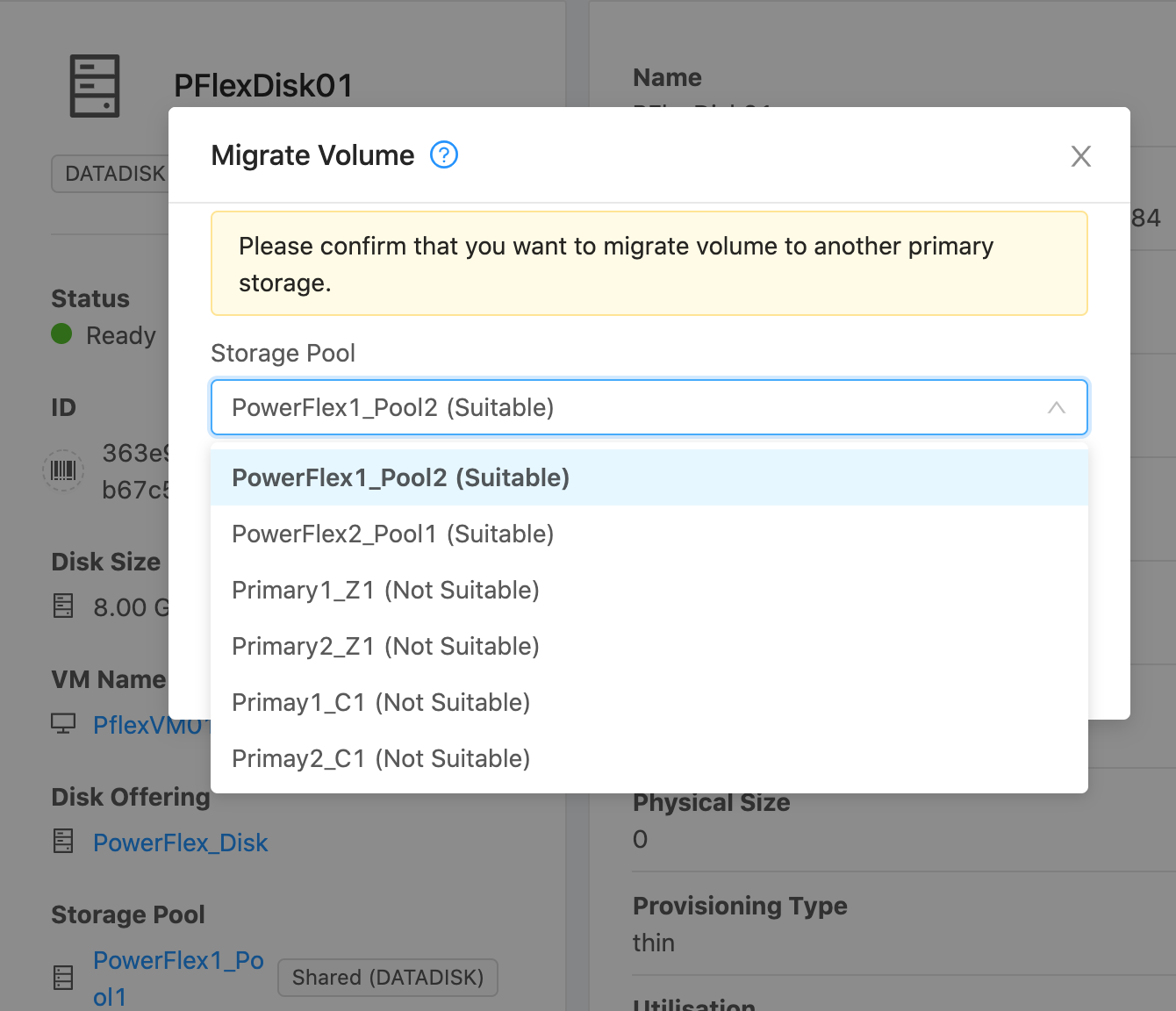

- Migrate volume from one PowerFlex storage pool to another. Supports both encrypted or non-encrypted volumes which are attached to either stopped or running VM.

-

- Supports migration within the same PowerFlex storage cluster, along with snapshots, using the native V-Tree (Volume Tree) migration operation.

- Supports migration across different PowerFlex storage clusters, without snapshots, through block copy (using qemu-img convert) of the disks, by mapping the source and target disks (with RAW format) in the same host, which acts as an SDC.

-

- Config drive on scratch/cache space on KVM host, using the path specified in the agent.properties file on the KVM host.
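Two of the rules in this list are easy to get wrong when planning capacity or migrations: the 8 GB size boundary and the choice between V-Tree migration and block copy. The Python sketch below illustrates both. The function names, the PowerFlex system IDs used to tell clusters apart, and the device paths are hypothetical placeholders; only the qemu-img convert flags (-f raw -O raw) are real options of that tool:

```python
GIB = 1024 ** 3
BOUNDARY_GIB = 8  # PowerFlex allocates volume capacity in 8 GB units


def powerflex_size_gib(requested_bytes: int) -> int:
    """Round a requested disk size up to the next 8 GB boundary, in GiB."""
    requested_gib = -(-requested_bytes // GIB)  # integer ceiling division
    return -(-requested_gib // BOUNDARY_GIB) * BOUNDARY_GIB


def migration_plan(src_system_id: str, dst_system_id: str, src_device: str, dst_device: str) -> dict:
    """Pick a migration approach for a volume moving between PowerFlex pools (sketch)."""
    if src_system_id == dst_system_id:
        # Same PowerFlex storage cluster: native V-Tree migration keeps snapshots.
        return {"method": "vtree-migration", "keeps_snapshots": True}
    # Different clusters: both RAW disks are mapped to one host (SDC) and block-copied.
    return {
        "method": "block-copy",
        "keeps_snapshots": False,
        "command": ["qemu-img", "convert", "-f", "raw", "-O", "raw", src_device, dst_device],
    }


if __name__ == "__main__":
    print(powerflex_size_gib(3 * GIB))  # prints: 8
    print(powerflex_size_gib(9 * GIB))  # prints: 16
```

For example, the 3 GB disk offering shown earlier would occupy 8 GB on the PowerFlex pool.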

New Settings

Some new settings are introduced for effective management of the operations on a PowerFlex storage pool:

| Configuration | Description | Default |
| --- | --- | --- |
| storage.pool.disk.wait | New primary storage level configuration to set the custom wait time for PowerFlex disk availability in the host (currently supports PowerFlex only). | 60 secs |
| storage.pool.client.timeout | New primary storage level configuration to set the PowerFlex REST API client connection timeout (currently supports PowerFlex only). | 60 secs |
| storage.pool.client.max.connections | New primary storage level configuration to set the PowerFlex REST API client max connections (currently supports PowerFlex only). | 100 |
| custom.cs.identifier | New global configuration holding a 4-character string (randomly generated initially). It can be updated to a unique CloudStack installation identifier, which helps track the volumes of a specific CloudStack installation when the PowerFlex storage pool is shared. There is no minimum or maximum length restriction, but the maximum length is subject to the PowerFlex volume naming restrictions. | random 4-character string |
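The storage.pool.disk.wait setting bounds how long the host waits for a mapped PowerFlex disk to become available. A minimal polling sketch of that idea in Python; the function name, device path and poll interval are assumptions, and since the CloudStack agent is written in Java this only illustrates the behaviour, it is not the plugin's implementation:

```python
import os
import time


def wait_for_disk(device_path: str, wait_secs: float = 60, poll_interval: float = 1.0) -> bool:
    """Poll until device_path exists or wait_secs elapses (sketch of storage.pool.disk.wait)."""
    deadline = time.monotonic() + wait_secs
    while True:
        if os.path.exists(device_path):
            return True
        if time.monotonic() >= deadline:
            return False
        # Never sleep past the deadline.
        time.sleep(min(poll_interval, max(0.0, deadline - time.monotonic())))


if __name__ == "__main__":
    # Hypothetical device path; 60 mirrors the setting's default of 60 secs.
    print(wait_for_disk("/dev/disk/by-id/EXAMPLE-POWERFLEX-DISK", wait_secs=2))
```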

In addition, the following settings are added or updated to facilitate config drive caching on the host and router health checks when the volumes of the underlying VMs and routers are on the PowerFlex pool.

| Configuration | Description | Default |
| --- | --- | --- |
| vm.configdrive.primarypool.enabled | The scope changed from Global to Zone level, which allows enabling this per zone. | false |
| vm.configdrive.use.host.cache.on.unsupported.pool | New zone level configuration to use the host cache for config drives when the storage pool doesn’t support config drives. | true |
| vm.configdrive.force.host.cache.use | New zone level configuration to force the use of the host cache for config drives. | false |
| router.health.checks.failures.to.recreate.vr | A new test, “filesystem.writable.test”, is added, which checks whether the router filesystem is writable. If set to “filesystem.writable.test”, the router is recreated when its disk is read-only. | (empty) |
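The three config drive settings combine into a single placement decision per zone. The Python sketch below is a simplified reading of the table descriptions, not CloudStack's actual code; the function name is hypothetical, and falling back to secondary storage (CloudStack's usual config drive location) in the remaining cases is an assumption:

```python
def config_drive_location(force_host_cache: bool,
                          primary_pool_enabled: bool,
                          use_host_cache_on_unsupported_pool: bool,
                          pool_supports_config_drive: bool) -> str:
    """Simplified sketch of how the zone-level config drive settings interact."""
    if force_host_cache:
        # vm.configdrive.force.host.cache.use overrides everything else.
        return "host cache"
    if primary_pool_enabled:
        if pool_supports_config_drive:
            return "primary storage"
        if use_host_cache_on_unsupported_pool:
            # vm.configdrive.use.host.cache.on.unsupported.pool (default true)
            return "host cache"
    # Assumed fallback: the default config drive location.
    return "secondary storage"


if __name__ == "__main__":
    # Primary pool config drives enabled on a pool assumed not to support them.
    print(config_drive_location(False, True, True, False))  # prints: host cache
```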

Agent Parameters

The agent on the KVM host uses a cache location for storing config drives. It also uses some commands of the PowerFlex client (SDC) to sync the mapped volumes. The following parameters are introduced in the agent.properties file to specify a custom cache path and the SDC installation path (if different from the default):

| Parameter | Description | Default |
| --- | --- | --- |
| host.cache.location | New parameter to specify the host cache path. Config drives are created in the “/config” directory under the host cache path. | /var/cache/cloud |
| powerflex.sdc.home.dir | New parameter to specify the SDC home path if the SDC is installed in a custom directory; required to rescan and query the volumes (query_vols) in the SDC. | /opt/emc/scaleio/sdc |
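The two parameters determine where config drives land and where the SDC tools are found. A Python sketch that reads them from agent.properties with the defaults above; it handles only simple key=value lines (the full Java properties format also allows ':' separators and escapes), and the drv_cfg location under bin/ and its --rescan / --query_vols flags are assumptions about the SDC installation:

```python
AGENT_DEFAULTS = {
    "host.cache.location": "/var/cache/cloud",
    "powerflex.sdc.home.dir": "/opt/emc/scaleio/sdc",
}


def parse_agent_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and '#' comments."""
    props = dict(AGENT_DEFAULTS)
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def derived_paths(props: dict) -> dict:
    """Paths the agent would use, per the parameter descriptions (sketch)."""
    cache = props["host.cache.location"].rstrip("/")
    drv_cfg = props["powerflex.sdc.home.dir"].rstrip("/") + "/bin/drv_cfg"  # assumed layout
    return {
        "config_drive_dir": cache + "/config",
        "rescan_cmd": [drv_cfg, "--rescan"],
        "query_vols_cmd": [drv_cfg, "--query_vols"],
    }


if __name__ == "__main__":
    print(derived_paths(parse_agent_properties("host.cache.location=/mnt/cache\n")))
```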

Implementation details

This new storage plugin is implemented using the storage subsystem framework in the CloudStack architecture. A new storage provider “PowerFlex” is introduced with the associated subsystem classes (Driver, Lifecycle, Adaptor, Pool), which are responsible for handling all the operations supported for Dell EMC PowerFlex / ScaleIO storage pools.

A ScaleIO gateway client is added to communicate with the PowerFlex / ScaleIO gateway server using RESTful APIs for various operations and to query the pool stats. It facilitates the following functionality:

- Secure authentication with the provided URL and credentials

- Auto-renewal of the client (after session expiry and on a ‘401 Unauthorized’ response) without restarting the management server

- List all storage pools; find a storage pool by ID / name

- List all SDCs; find an SDC by IP address

- Map / Unmap volume(s) to SDC (a KVM host)

- Other volume lifecycle operations supported in ScaleIO
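The auto-renewal behaviour can be pictured as "retry once after logging in again". The Python sketch below shows that pattern with an injected transport so it runs without a gateway. The class and transport signature are hypothetical; the token flow (GET /api/login with the user's credentials returning a quoted token that is then sent as the password) is an assumption about the PowerFlex gateway, and the real client in the plugin is Java:

```python
import base64
from typing import Callable, Optional, Tuple

# Hypothetical transport: (method, path, authorization header) -> (HTTP status, body).
Transport = Callable[[str, str, str], Tuple[int, str]]


def basic_auth(user: str, secret: str) -> str:
    token = base64.b64encode(f"{user}:{secret}".encode()).decode()
    return f"Basic {token}"


class GatewayClient:
    """Sketch of a gateway client that renews its session on '401 Unauthorized'."""

    def __init__(self, transport: Transport, user: str, password: str) -> None:
        self._transport = transport
        self._user = user
        self._password = password
        self._token: Optional[str] = None

    def _login(self) -> str:
        # Assumed flow: log in with the user's password, receive a session token.
        status, body = self._transport("GET", "/api/login", basic_auth(self._user, self._password))
        if status != 200:
            raise PermissionError(f"gateway login failed: HTTP {status}")
        self._token = body.strip().strip('"')
        return self._token

    def get(self, path: str) -> str:
        token = self._token or self._login()
        for attempt in range(2):
            status, body = self._transport("GET", path, basic_auth(self._user, token))
            if status == 401 and attempt == 0:
                # Session expired: log in again and retry once, no restart needed.
                token = self._login()
                continue
            if status != 200:
                raise RuntimeError(f"GET {path} failed: HTTP {status}")
            return body
        raise RuntimeError("unreachable")  # the second attempt always returns or raises
```

Injecting the transport keeps the renewal logic independent of any particular HTTP library and makes it easy to test with a fake gateway.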

All storage-related operations (e.g., attach volume, detach volume, copy volume, delete volume, migrate volume, etc.) are handled by various command handlers and the KVM storage processor, as orchestrated by the KVM server resource class (LibvirtComputingResource).

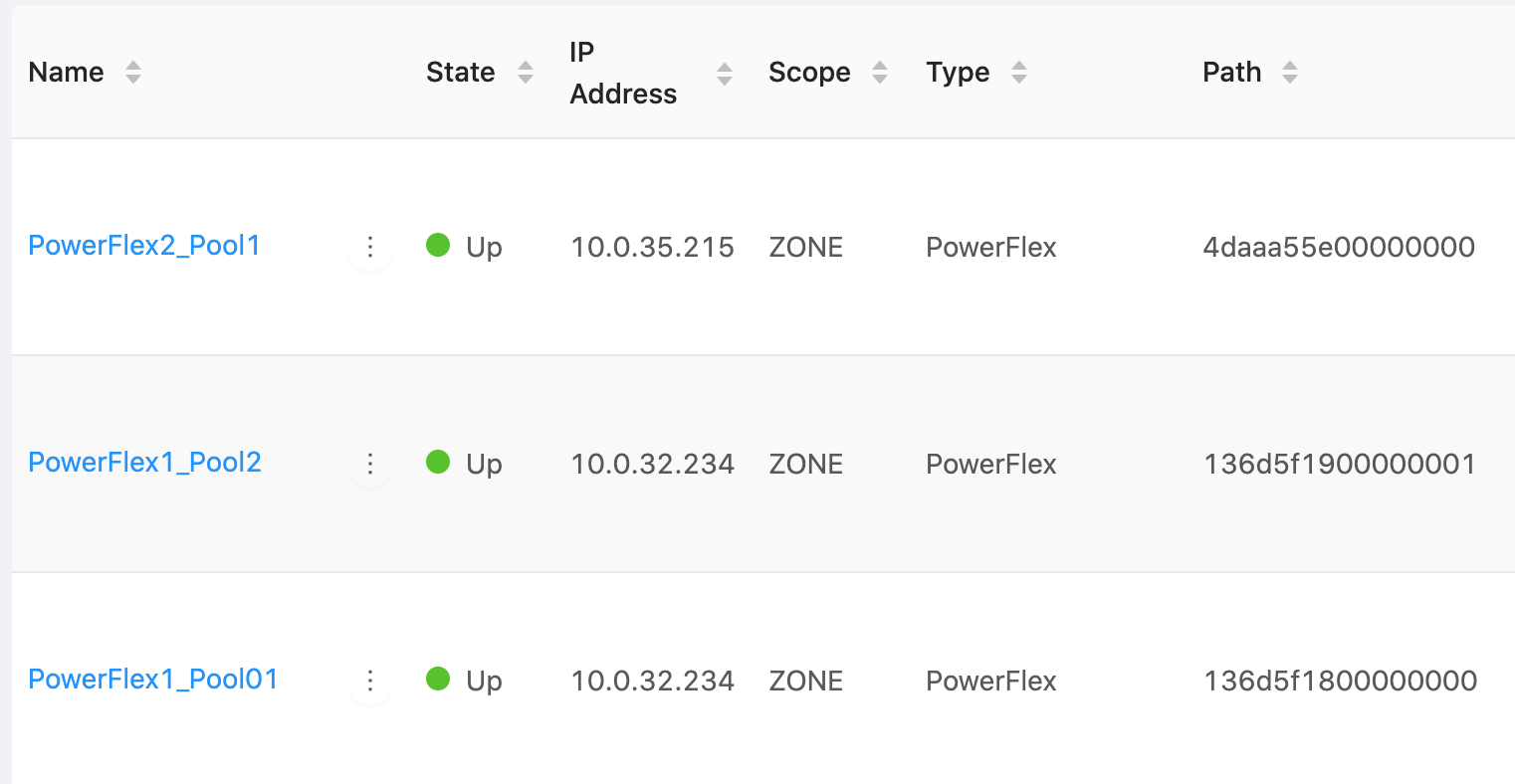

For volume migration, PowerFlex storage pools suitable for migration are marked as such (when the underlying storage allows it). These pools are listed when searching for suitable PowerFlex pools for migration as shown below.

The cache storage directory path on the KVM host is taken from the “host.cache.location” parameter in the agent.properties file. This path is used to store config drive ISOs.

Naming conventions used for PowerFlex volumes

The following naming conventions are used for CloudStack resources in a PowerFlex storage pool, which avoids naming conflicts when the same PowerFlex pool is shared across multiple CloudStack zones / installations:

- Volume: vol-[vol-id]-[pool-key]-[custom.cs.identifier]

- Template: tmpl-[tmpl-id]-[pool-key]-[custom.cs.identifier]

- Snapshot: snap-[snap-id]-[pool-key]-[custom.cs.identifier]

- VMSnapshot: vmsnap-[vmsnap-id]-[vol-id]-[pool-key]-[custom.cs.identifier]

where:

[pool-key] = 4 characters picked from the pool UUID. Example UUID: fd5227cb-5538-4fef-8427-4aa97786ccbc => fd52(27cb)-5538-4fef-8427-4aa97786ccbc. The 4 characters shown in parentheses (the 5th to 8th characters of the UUID) are picked. The pool can be tracked by the UUID containing the [pool-key].

[custom.cs.identifier] = value of the global configuration “custom.cs.identifier”, which initially holds a randomly generated 4-character string. This parameter can be updated to a unique CloudStack installation identifier, which helps in tracking the volumes of a specific CloudStack installation.
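Reproducing these names helps when looking up CloudStack resources directly in PowerFlex. A small Python sketch applying the conventions above; the function names and the sample IDs and identifier are hypothetical:

```python
from typing import Optional


def pool_key(pool_uuid: str) -> str:
    """Characters 5-8 of the pool UUID, e.g. fd52(27cb)-5538-... -> '27cb'."""
    return pool_uuid[4:8]


def powerflex_name(kind: str, resource_id: int, pool_uuid: str, cs_identifier: str,
                   vol_id: Optional[int] = None) -> str:
    """Build a vol-/tmpl-/snap-/vmsnap- name per the naming conventions (sketch)."""
    parts = [f"{kind}-{resource_id}"]
    if vol_id is not None:  # only VM snapshots embed the volume id
        parts.append(str(vol_id))
    parts += [pool_key(pool_uuid), cs_identifier]
    return "-".join(parts)


if __name__ == "__main__":
    uuid = "fd5227cb-5538-4fef-8427-4aa97786ccbc"
    print(powerflex_name("vol", 12, uuid, "a1b2"))               # prints: vol-12-27cb-a1b2
    print(powerflex_name("vmsnap", 5, uuid, "a1b2", vol_id=12))  # prints: vmsnap-5-12-27cb-a1b2
```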

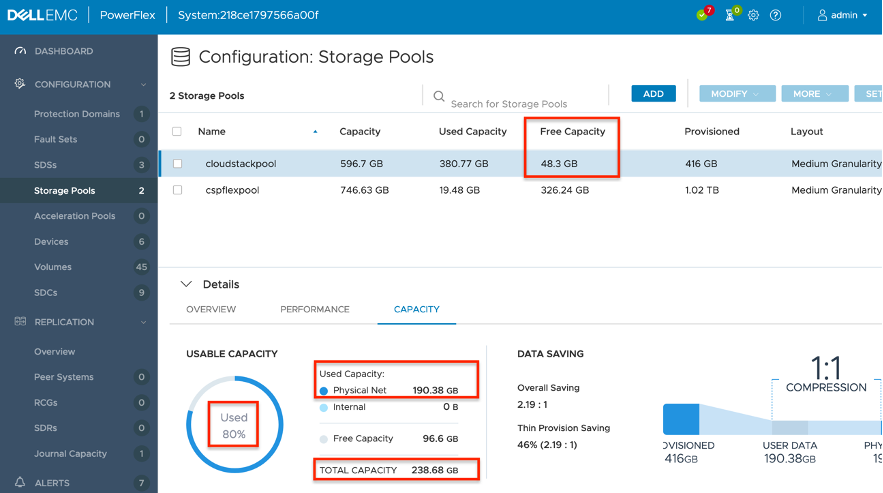

PowerFlex Capacity in CloudStack

The PowerFlex capacity that CloudStack uses for its various capacity-related checks matches the capacity stats marked with red boxes in the image below.

The Provisioned size (“sizeInKb” property) and Allocated size (“netProvisionedAddressesInKb” property) of the PowerFlex volume from Query Volume object API response, are considered as the virtual size (total capacity) and used size of the volume in CloudStack respectively.
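Both properties are reported in KB, so mapping them to the byte values CloudStack works with is a multiplication by 1024. A minimal Python sketch, assuming the Query Volume response has already been parsed into a dict and that KB here means 1024 bytes; the function name is hypothetical:

```python
def powerflex_volume_capacity(query_volume: dict) -> tuple:
    """Return (virtual_size_bytes, used_bytes) from a parsed Query Volume response."""
    # Provisioned size -> virtual size (total capacity) of the volume in CloudStack.
    virtual_size = int(query_volume["sizeInKb"]) * 1024
    # Allocated size -> used size of the volume in CloudStack.
    used = int(query_volume["netProvisionedAddressesInKb"]) * 1024
    return virtual_size, used


if __name__ == "__main__":
    sample = {"sizeInKb": 8388608, "netProvisionedAddressesInKb": 1048576}
    print(powerflex_volume_capacity(sample))  # prints: (8589934592, 1073741824)
```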

Other Considerations


- CloudStack does not manage the creation of storage pools, protection domains, etc. in ScaleIO; the admin must create these before adding the storage pool in CloudStack. Similarly, deleting a ScaleIO storage pool in CloudStack does not delete or remove the storage pool on the ScaleIO side.

- The ScaleIO SDC must be installed on the KVM host(s), with its service running and connected to the ScaleIO Metadata Manager (MDM).

- The seeded ScaleIO template volume(s) [in RAW format] converted from the direct templates [QCOW2/RAW] on secondary storage have the template’s virtual size as the Allocated size in ScaleIO, irrespective of the “Zero Padding Policy” setting for the pool.

- The ScaleIO ROOT volume(s) [RAW] converted from the seeded template volume(s) [RAW] have their total capacity (virtual size) as the Allocated size in ScaleIO, irrespective of the “Zero Padding Policy” setting for the pool.

- The ScaleIO DATA volume(s) [RAW] created / attached initially have an Allocated size of ‘0’ in ScaleIO, which changes as data is written through the file system or a block copy.

This feature will be included in the Q3 2021 LTS release of Apache CloudStack.

More about Dell EMC PowerFlex

- Getting to Know PowerFlex: https://cpsdocs.dellemc.com/bundle/PF_KNOW/page/GUID-D6DFA46A-6085-47CE-88A9-B503EFC6CFD2.html

- PowerFlex product documentation: https://www.dell.com/support/home/en-in/product-support/product/scaleio/docs

- PowerFlex Limitations: https://cpsdocs.dellemc.com/bundle/PF_KNOW/page/GUID-480ABD0F-4DB5-4F07-9EC4-4779CBF5E9A4.html

Suresh Anaparti has over 15 years of end-to-end product development experience in Cloud Infrastructure, Telecom and Geospatial technologies. He is an active Apache CloudStack committer. He has been working on CloudStack development for more than 5 years.

You can learn more about Suresh and his background by reading his Meet The Team blog.