Introduction

In this blog we discuss applications of machine learning (ML) in datacentres and how they might integrate with Apache CloudStack (ACS). We also try to identify the places in the datacentre lifecycle where such tools can be helpful.

With any datacentre deployment, the primary goal is to achieve efficient resource provisioning whilst also maintaining performance and availability. Datacentres have become complex and multidimensional, both in terms of software and hardware, and hybrid hosting adds further complexity. Maintaining an optimal deployment with minimal downtime is consequently becoming more challenging with manual operations.

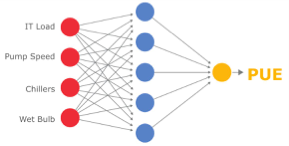

Recent trends show some companies moving towards smarter ways to design, deploy and maintain their infrastructure, with a proactive rather than reactive approach – i.e. a prevention-driven approach instead of break / fix. Some common examples include HPE Insights; Google’s in-house cooling system (see Figure 1); Facebook using ML for scheduling jobs; and Litbit’s development of Dac, the first AI-powered datacentre operator. Apache CloudStack would be a good candidate for such integration and experimentation, as it provides a clean, easy way to deploy and maintain storage, network and compute.

Figure 1: Neural network representation of Google’s power cooling system

Artificial Intelligence / Machine Learning (AI / ML) can play a crucial role in this regard, and several players in the market are working towards a smarter, data-driven approach to implementing datacentres. We will first discuss AI / ML terminology and techniques as a toolkit and then propose some problems we can solve with ML.

Related terms

AI is an old but still emerging field concerned with making machines smart, and it covers a wide range of techniques. ML is a subset of AI that involves developing models to either:

- Classify (i.e. given a set of parameters, we want to assign a class to an entity represented by these parameters)

- Predict a value (i.e. given a set of parameters, we want to guess the value of a function dependent on these parameters)

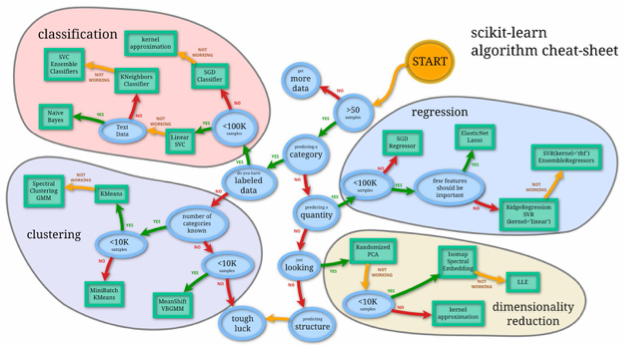

To solve these problems there are several models that can be employed, and some of these are shown in Figure 2.

Figure 2: Cheat sheet to understand what can be done with data.

ML is a very broad field; for the applications we propose later, the following tools are a good starting point:

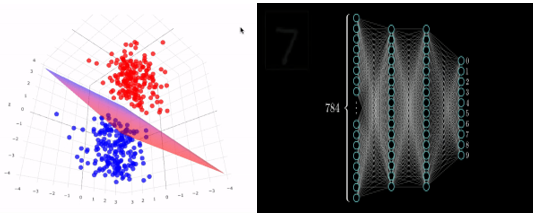

- Support Vector Machine (SVM) – in its basic form, a linear classifier for binary classification. E.g. classifying whether a host is in an error state. Figure 3a shows what a 3D SVM looks like and what kind of spatial distribution favours applying an SVM.

- Decision Trees – a data driven weighted tree for making decisions. E.g. which host to use for VM migrations in case of failure.

- Logistic Regression – uses data to fit the weights of a logistic model, which outputs a probability. An example could be predicting the probability of host failure given a specific set of parameters.

- Neural Networks – one of the most actively used families of models for classification. Used primarily for image / signal processing, they can also be applied to tasks such as classifying energy efficiency. The drawback of these models is that they need massive amounts of data for training; in general, the more training data is available, the higher the accuracy. Figure 3b shows a variant of neural network (a multi-layer perceptron) and illustrates the large number of weights needed when a 28×28 grayscale image is the input. Each edge between nodes is a weight to be trained, and the setup shown has around 13,000 weights.

Figure 3: (a) left shows a simple 3D SVM; (b) right shows a multi-layer perceptron for recognising handwritten digits, with a 28×28 grayscale image as input and 2 hidden layers of 16 nodes each
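
The figure of around 13,000 weights can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the output layer of 10 nodes (one per digit) is an assumption, since only the input size and the two hidden layers are stated, and the function name is made up for illustration.

```python
def count_mlp_weights(layer_sizes):
    """Count edge weights (excluding biases) in a fully connected network."""
    # Every node in one layer connects to every node in the next layer.
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# 28x28 input pixels, two hidden layers of 16 nodes,
# and an ASSUMED output layer of 10 nodes (digits 0 to 9).
layers = [28 * 28, 16, 16, 10]

weights = count_mlp_weights(layers)
biases = sum(layers[1:])  # one bias per non-input node

print(weights, biases, weights + biases)  # 12960 42 13002
```

Even this small network has roughly 13,000 trainable values, which is why neural networks tend to need far more training data than the other models listed above.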

It is worth calling out that there are several open source frameworks that provide these models to experiment with (e.g. numpy, scipy, scikit-learn, pytorch, tensorflow, etc). Most of the effort in a typical ML application goes into the due diligence of identifying parameters, collecting data, training, and then using the trained models at run time. This blog does not go into further detail on this, and interested readers should explore further. The aim here is to establish that we can use data (pre-collected or collected on the fly) to develop models for classification and value prediction.
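
To make the collect, train and predict cycle concrete, here is a minimal sketch of logistic regression written in plain Python. Everything in it is an assumption made for illustration: the two features (a scaled CPU temperature and a scaled disk error rate), the hand-made data points and the function names do not come from CloudStack or any real dataset. In practice one of the frameworks listed above would be used instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, lr=0.1, epochs=2000):
    """Fit weights and bias with plain stochastic gradient descent."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss w.r.t. the linear output
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def failure_probability(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# HYPOTHETICAL training data: (cpu temperature / 100, disk error rate in 0..1)
samples = [(0.45, 0.0), (0.50, 0.1), (0.55, 0.1), (0.60, 0.2),
           (0.80, 0.7), (0.85, 0.8), (0.90, 0.9), (0.95, 0.8)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = host failed shortly afterwards

w, b = train_logistic(samples, labels)
healthy = failure_probability(w, b, (0.50, 0.1))
risky = failure_probability(w, b, (0.90, 0.9))
print(f"healthy host: {healthy:.2f}, risky host: {risky:.2f}")
```

The trained model returns a probability between 0 and 1, so the same model can serve value prediction (the failure probability itself) or classification (by applying a threshold such as 0.5).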

Applications in datacentres

Having laid out some basic concepts, we can now discuss some general applications of Machine Learning in datacentres and CloudStack.

Energy efficiency: this is the most common and successful application of machine learning in datacentre operations. For example, Google has published that with deep learning and using 120 parameters ranging from IT load, weather conditions, number of chillers and cooling towers running, equipment setpoints, etc., they were able to reduce energy consumption by 30% and also reduce the greenhouse gases produced.

Operational optimizations: these are fairly hard to maintain as they depend largely on run-time loads, but if implemented successfully they can generate high returns. Inefficient CPU, disk and network utilization, and inefficient load balancing across servers, can mean large losses in revenue. Load balancing tools with built-in ML models are being developed and are able to learn from past data to distribute load more efficiently. With less congestion and overload, we can also avoid many of the errors that cause downtime.

This application has huge potential for integration into CloudStack, and with built-in models admins can be given a choice of which model to use for load distribution. The integrated architecture of CloudStack also helps, allowing easy plugin-like integration of decision modules.

Fault prediction and remediation: this supplements operational optimizations. The former refers to resource allocation and restructuring, while this application covers a wide array of fault prediction at both software and hardware level. Products like HPE Insights use data such as temperature fluctuations, movement speeds, and read / write times or their fluctuations to predict host or disk failures in the near future. This can help in proactive remediation without any downtime. CloudStack is also a good candidate for developing such tools: alongside its existing monitoring tasks we can add extra checks and collect data to train models for them.
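
The idea of letting admins choose a model for load distribution can be sketched as a small registry of interchangeable predictors. This is a hypothetical illustration only: the names `PREDICTORS` and `pick_host`, the host names and the utilisation figures are invented here and are not part of any CloudStack API.

```python
from typing import Callable, Dict, List

# A "model" is any function that maps a host's recent CPU utilisation
# history (values in 0..1) to a predicted utilisation for the next interval.
Predictor = Callable[[List[float]], float]


def last_value(history: List[float]) -> float:
    return history[-1]


def moving_average(history: List[float], window: int = 3) -> float:
    recent = history[-window:]
    return sum(recent) / len(recent)


# Hypothetical registry an admin could choose from by name.
PREDICTORS: Dict[str, Predictor] = {
    "last_value": last_value,
    "moving_average": moving_average,
}


def pick_host(histories: Dict[str, List[float]], model_name: str) -> str:
    """Return the host with the lowest predicted load under the chosen model."""
    predict = PREDICTORS[model_name]
    return min(histories, key=lambda host: predict(histories[host]))


# Made-up utilisation histories for two hosts.
histories = {
    "host-a": [0.30, 0.35, 0.90],
    "host-b": [0.70, 0.65, 0.60],
}

print(pick_host(histories, "last_value"))      # host-b
print(pick_host(histories, "moving_average"))  # host-a
```

The two predictors disagree on the same data, which is the point of making the model selectable: a trained ML model could be registered under another name without changing the placement logic.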
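
As a very simple stand-in for a trained fault-prediction model, an extra monitoring check could flag readings that deviate strongly from a host's own baseline. The sketch below uses a plain z-score rather than ML, and the metric (disk read latency in milliseconds), the sample values and the threshold are all assumptions made for illustration.

```python
from statistics import mean, stdev


def is_anomalous(baseline, new_value, threshold=3.0):
    """Flag a reading that lies more than `threshold` standard deviations
    away from the mean of the baseline readings."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is unusual.
        return new_value != mu
    return abs(new_value - mu) / sigma > threshold


# HYPOTHETICAL disk read latencies (ms) collected during normal operation.
baseline_ms = [4.1, 3.9, 4.0, 4.2, 4.0, 3.8, 4.1, 4.0]

print(is_anomalous(baseline_ms, 4.2))  # False: within normal variation
print(is_anomalous(baseline_ms, 9.5))  # True: candidate for proactive action
```

Readings flagged this way, together with the outcome that followed them, are also the kind of labelled data a real model would later be trained on.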

Within the above broad categories there are some problems that can be addressed in ACS:

- Load balancing techniques – better compute, storage and network utilization. It can also deliver lower downtime. By predicting future heavy workloads and traffic, pre-emptive migrations can also be scheduled.

- VM and volume placement – smarter placement and migration in case of failures can result in lower downtime.

- Host failure prediction – a prediction and maintenance module can trigger migrations in case of failures and notify admins so that they can take precautionary measures.

- Smarter routers can be developed by injecting AI driven tools into virtual routers.

- Log analysis – logs generated by ACS can be parsed and used for providing recommendations for possible root causes in case of failures.
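
As a first step towards the log analysis item above, exception names can be counted per log file to build features for a recommendation model. The sketch below is an assumption-laden illustration: the log lines are invented and do not reproduce the actual ACS log format, and the regular expression simply looks for identifiers ending in `Exception` or `Error`.

```python
import re
from collections import Counter

# Matches (optionally dotted) identifiers ending in "Exception" or "Error".
EXCEPTION_RE = re.compile(r"\b([A-Za-z_][\w.]*(?:Exception|Error))\b")


def summarise_failures(log_lines):
    """Count exception names found on ERROR / WARN lines, most frequent first."""
    counts = Counter()
    for line in log_lines:
        if " ERROR " not in line and " WARN " not in line:
            continue
        for name in EXCEPTION_RE.findall(line):
            # Keep only the class name, dropping any package prefix.
            counts[name.rsplit(".", 1)[-1]] += 1
    return counts.most_common()


# INVENTED sample lines, not real CloudStack output.
sample_log = [
    "2024-01-01 10:00:01 INFO  [demo] VM started",
    "2024-01-01 10:00:02 ERROR [demo] migrate failed: java.util.concurrent.TimeoutException",
    "2024-01-01 10:00:03 ERROR [demo] retry failed: TimeoutException after 30s",
    "2024-01-01 10:00:04 WARN  [demo] host unreachable: ConnectionError",
]

print(summarise_failures(sample_log))
# [('TimeoutException', 2), ('ConnectionError', 1)]
```

Counts like these could be fed into any of the classifiers described earlier to suggest a likely root cause for a given failure.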

While these applications can be beneficial, there needs to be an awareness of at least two challenges that come with ML:

- What the data is and where it comes from. Collecting the right data and maintaining it is a challenge and forms the bedrock of most ML problems. CloudStack’s end-to-end orchestration provides a unique opportunity to generate such data. Some use cases can also leverage existing logs and usage data, while some may need the current logging to be extended.

- An argument can be made that storing data for training and running additional decision / prediction tasks takes up resources. However, in most cases the return on the investment can prove to be orders of magnitude higher.

Conclusion

This blog discusses various possible use cases of Machine Learning in CloudStack and how it can help improve datacentre performance.

The applications of machine learning and AI in datacentres are expected to grow as complexity increases. CloudStack’s integrated architecture and reach across various resources make it an ideal candidate to adopt and leverage these tools. Data generation plugins and decision-making modules can be implemented, utilising CloudStack’s reach across the infrastructure and its support for different hypervisors and storage types.

Blog by: Anurag Awasthi, Software Engineer, ShapeBlue.
