Introduction

KVM hypervisor networking for CloudStack can sometimes be a challenge, considering KVM doesn’t quite have the mature guest networking model found in the likes of VMware vSphere and Citrix XenServer. In this blog post we look at the options for networking KVM hosts using bridges and VLANs, and dive a bit deeper into the configuration of these options. Installation of the hypervisor and CloudStack agent is pretty well covered in the CloudStack installation guide, so we won’t spend too much time on this.

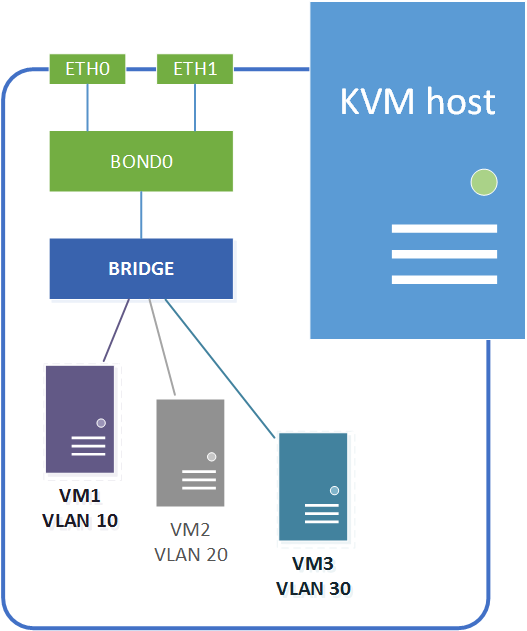

Network bridges

On a Linux KVM host, guest networking is accomplished using network bridges. These are similar to vSwitches on a VMware ESXi host or networks on a XenServer host (in fact, networking on a XenServer host is also accomplished using bridges).

A Linux network bridge is a Layer-2 software device which allows traffic to be forwarded between ports internally on the bridge and the physical network uplinks. The traffic flow is controlled by MAC address tables maintained by the bridge itself, which record which hosts are reachable through which bridge port. The bridge allows for traffic segregation using traditional Layer-2 VLANs as well as SDN Layer-3 overlay networks.

Linux bridges vs OpenVswitch

The bridging on a KVM host can be accomplished using traditional Linux bridge networking or by adopting an OpenVswitch back end. Traditional Linux bridges have been implemented in the Linux kernel since version 2.2, and have been maintained through the 2.4 and 2.6 kernels. Linux bridges provide all the basic Layer-2 networking required for a KVM hypervisor back end, but they lack some automation options and are configured on a per-host basis.

OpenVswitch was developed to address this, and provides additional automation as well as new networking capabilities like Software Defined Networking (SDN). OpenVswitch allows for centralised control and distribution across physical hypervisor hosts, similar to distributed vSwitches in VMware vSphere. Distributed switch control does require additional controller infrastructure like OpenDaylight, Nicira, VMware NSX, etc., which we won’t cover in this article as it’s not a requirement for CloudStack.

It is also worth noting Citrix started using the OpenVswitch backend in XenServer 6.0.

Network configuration overview

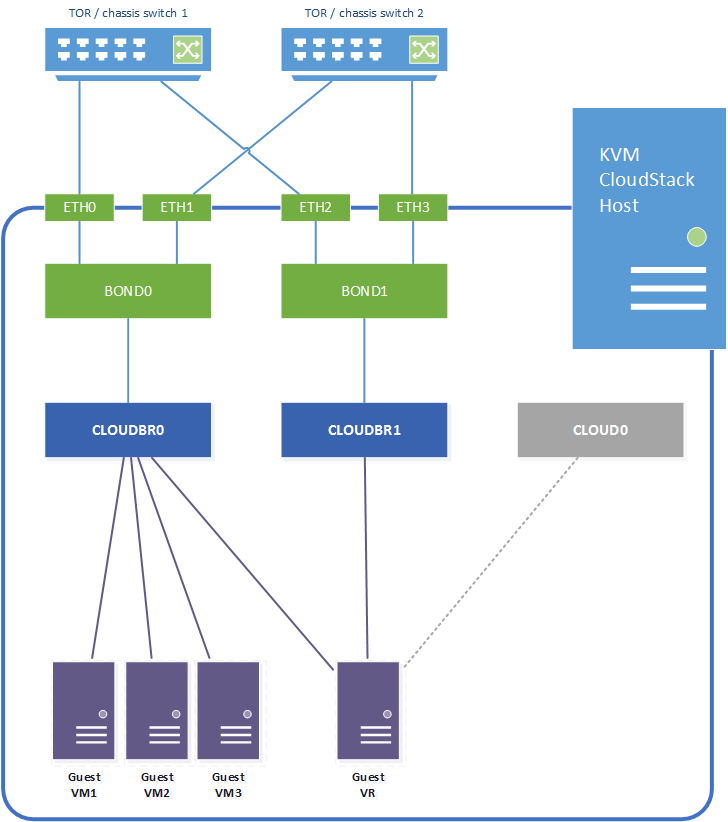

For this example we will configure the following networking model, assuming a Linux host with four network interfaces which are bonded in pairs for resilience. We also assume all switch ports are trunk ports:

- Network interfaces eth0 + eth1 are bonded as bond0.

- Network interfaces eth2 + eth3 are bonded as bond1.

- Bond0 provides the physical uplink for the bridge “cloudbr0”. This bridge carries the untagged host network interface / IP address, and will also be used for the VLAN tagged guest networks.

- Bond1 provides the physical uplink for the bridge “cloudbr1”. This bridge handles the VLAN untagged public traffic.

The CloudStack zone networks will then be configured as follows:

- Management and guest traffic is configured to use KVM traffic label “cloudbr0”.

- Public traffic is configured to use KVM traffic label “cloudbr1”.

In addition to the above it’s important to remember CloudStack itself requires internal connectivity from the hypervisor host to system VMs (Virtual Routers, SSVM and CPVM) over the link local 169.254.0.0/16 subnet. This is done over a host-only bridge “cloud0”, which is created by CloudStack when the host is added to a CloudStack zone.

Linux bridge configuration

CentOS

In CentOS the Linux bridge configuration is done with configuration files in /etc/sysconfig/network-scripts.

Each of the four individual NICs is configured as follows. eth0 and eth1 use MASTER=bond0 as shown; eth2 and eth3 are configured the same way but with MASTER=bond1 (DEVICE and HWADDR are set per interface):

# vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0
TYPE=Ethernet
USERCTL=no
MASTER=bond0
SLAVE=yes
BOOTPROTO=none
HWADDR=00:0C:12:xx:xx:xx
NM_CONTROLLED=no
ONBOOT=yes

The bond configurations are specified in the equivalent ifcfg-bond scripts and set the bonding options as well as the upstream bridge name. In this case we’re just setting a basic active-passive bond (mode=1) with status monitoring every 100ms (miimon=100):

# vi /etc/sysconfig/network-scripts/ifcfg-bond0

DEVICE=bond0
ONBOOT=yes
BONDING_OPTS='mode=1 miimon=100'
BRIDGE=cloudbr0
NM_CONTROLLED=no

The same goes for bond1:

# vi /etc/sysconfig/network-scripts/ifcfg-bond1

DEVICE=bond1
ONBOOT=yes
BONDING_OPTS='mode=1 miimon=100'
BRIDGE=cloudbr1
NM_CONTROLLED=no

Cloudbr0 is configured in the ifcfg-cloudbr0 script. In addition to the bridge configuration we also specify the host IP address, which is tied directly to the bridge since it is on an untagged VLAN:

# vi /etc/sysconfig/network-scripts/ifcfg-cloudbr0

DEVICE=cloudbr0
ONBOOT=yes
TYPE=Bridge
IPADDR=192.168.100.20
NETMASK=255.255.255.0
GATEWAY=192.168.100.1
NM_CONTROLLED=no
DELAY=0

Cloudbr1 does not have an IP address configured, hence the configuration is simpler:

# vi /etc/sysconfig/network-scripts/ifcfg-cloudbr1

DEVICE=cloudbr1
ONBOOT=yes
TYPE=Bridge
NM_CONTROLLED=no
DELAY=0

Optional tagged interface for storage traffic

If a dedicated VLAN tagged IP interface is required for e.g. storage traffic this can be accomplished by creating a VLAN tagged bond and tying this to a dedicated bridge. In this case we create a new bridge on bond0 using VLAN 100:

# vi /etc/sysconfig/network-scripts/ifcfg-bond0.100

DEVICE=bond0.100
VLAN=yes
BOOTPROTO=static
ONBOOT=yes
TYPE=Unknown
BRIDGE=cloudbr100

The bridge can now be configured with the desired IP address for storage connectivity:

# vi /etc/sysconfig/network-scripts/ifcfg-cloudbr100

DEVICE=cloudbr100
ONBOOT=yes
TYPE=Bridge
VLAN=yes
IPADDR=10.0.100.20
NETMASK=255.255.255.0
NM_CONTROLLED=no
DELAY=0

Internal bridge cloud0

When using Linux bridge networking there is no requirement to configure the internal “cloud0” bridge; this is all handled by CloudStack.

Network startup

Note – once all network startup scripts are in place and the network service is restarted you may lose connectivity to the host if there are any configuration errors in the files, hence make sure you have console access to rectify any issues.
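One way to catch a common class of mistake before restarting the network is to check that every MASTER= and BRIDGE= reference in the ifcfg files points at a device that has its own ifcfg file. The following is only a minimal sketch of that idea: check_ifcfg_refs is a hypothetical helper name, not a CentOS or CloudStack tool, and it does not validate anything beyond these two references.

```shell
#!/bin/sh
# Sketch only: check_ifcfg_refs is a hypothetical helper, not a CentOS tool.
# It reports MASTER= / BRIDGE= references that have no matching ifcfg file.

check_ifcfg_refs() {
    dir=$1
    missing=0
    for file in "$dir"/ifcfg-*; do
        [ -f "$file" ] || continue
        # Collect the values of any MASTER= and BRIDGE= lines in this file.
        for ref in $(sed -n -e 's/^MASTER=//p' -e 's/^BRIDGE=//p' "$file" | tr -d '"'); do
            if [ ! -f "$dir/ifcfg-$ref" ]; then
                echo "$(basename "$file"): references $ref but ifcfg-$ref is missing"
                missing=1
            fi
        done
    done
    return $missing
}

# Example (run against the real directory before restarting the network):
#   check_ifcfg_refs /etc/sysconfig/network-scripts
```

The function returns non-zero if any reference is dangling, so it can be chained in front of the restart command.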

To make the configuration live restart the network service:

# service network restart

To check the bridges use the brctl command:

# brctl show

bridge name     bridge id               STP enabled     interfaces
cloudbr0        8000.000c29b55932       no              bond0
cloudbr1        8000.000c29b45956       no              bond1
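If this check needs to be scripted, the table can be parsed with awk. The sketch below assumes the column layout shown above (a header row, one row per bridge with its first port in the fourth column, and any further ports on continuation rows); bridge_has_port is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: bridge_has_port is a hypothetical helper that reads
# "brctl show"-style output on stdin and succeeds if BRIDGE lists PORT.

bridge_has_port() {
    awk -v bridge="$1" -v port="$2" '
        NR == 1 { next }                      # skip the header row
        NF >= 4 { current = $1; iface = $4 }  # bridge row with its first port
        NF == 3 { current = $1; iface = "" }  # bridge row without any ports
        NF == 1 { iface = $1 }                # continuation row: another port
        current == bridge && iface == port { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Example:
#   brctl show | bridge_has_port cloudbr0 bond0 && echo "cloudbr0 uplink OK"
```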

The bonds can be checked with:

# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)
Primary Slave: None
Currently Active Slave: eth0
MII Status: up
MII Polling Interval (ms): 100
Up Delay (ms): 0
Down Delay (ms): 0

Slave Interface: eth0
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:0c:xx:xx:xx:xx
Slave queue ID: 0

Slave Interface: eth1
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:0c:xx:xx:xx:xx
Slave queue ID: 0
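The two fields that matter most in this output are the currently active slave and the per-slave MII status. As a rough sketch, assuming the field labels shown above, they can be pulled out as follows (bond_active_slave and bond_down_slaves are hypothetical helper names):

```shell
#!/bin/sh
# Sketch only: both helpers are hypothetical and take the path of a
# /proc/net/bonding/<bond> style file as their only argument.

# Print the name of the currently active slave.
bond_active_slave() {
    sed -n 's/^Currently Active Slave: //p' "$1"
}

# Print every slave whose own "MII Status" is anything other than "up".
# The first MII Status line belongs to the bond itself and is skipped
# because no "Slave Interface" line has been seen yet.
bond_down_slaves() {
    awk -F': ' '
        $1 == "Slave Interface" { slave = $2 }
        $1 == "MII Status" && slave != "" && $2 != "up" { print slave }
    ' "$1"
}

# Example:
#   bond_active_slave /proc/net/bonding/bond0
#   bond_down_slaves /proc/net/bonding/bond0
```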

Ubuntu

To use bonding and linux bridge networking in Ubuntu first install the following:

# apt-get install ifenslave-2.6 bridge-utils

Also add the bonding and bridge modules to the kernel modules to be loaded at boot time:

# vi /etc/modules

# /etc/modules: kernel modules to load at boot time.
#
# This file contains the names of kernel modules that should be loaded
# at boot time, one per line. Lines beginning with "#" are ignored.
loop
lp
rtc
bonding
bridge

Before continuing also make sure the correct hostname and FQDN are set in /etc/hostname and /etc/hosts respectively. Also add the following lines to /etc/sysctl.conf:

# vi /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0
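When hosts are built from a script rather than by hand, the same three lines can be appended idempotently, so that re-running the script does not duplicate them. This is a minimal sketch; disable_bridge_netfilter is a hypothetical helper name and it only matches lines written exactly as above.

```shell
#!/bin/sh
# Sketch only: disable_bridge_netfilter is a hypothetical helper that
# appends each setting to the given file unless the exact line is present.

disable_bridge_netfilter() {
    file=$1
    for table in ip6tables iptables arptables; do
        line="net.bridge.bridge-nf-call-$table = 0"
        # -x matches whole lines only, -F treats the line as a fixed string.
        grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
    done
}

# Example (as root), followed by reloading the file:
#   disable_bridge_netfilter /etc/sysctl.conf && sysctl -p
```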

All interface, bond and bridge configuration is done in /etc/network/interfaces. As for CentOS, we configure basic active-passive bonds (mode=1) with status monitoring every 100ms (miimon=100), and configure bridges on top of these. As before, the host IP address is tied to cloudbr0:

# vi /etc/network/interfaces

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eth0
iface eth0 inet manual
    bond-master bond0

auto eth1
iface eth1 inet manual
    bond-master bond0

auto eth2
iface eth2 inet manual
    bond-master bond1

auto eth3
iface eth3 inet manual
    bond-master bond1

auto bond0
iface bond0 inet manual
    bond-mode active-backup
    bond-miimon 100
    bond-slaves none

auto bond1
iface bond1 inet manual
    bond-mode active-backup
    bond-miimon 100
    bond-slaves none

auto cloudbr0
iface cloudbr0 inet static
    address 192.168.100.20
    gateway 192.168.100.1
    netmask 255.255.255.0
    dns-nameservers 8.8.8.8 8.8.4.4
    dns-domain mynet.local
    bridge_ports bond0
    bridge_fd 5
    bridge_stp off
    bridge_maxwait 1

auto cloudbr1
iface cloudbr1 inet manual
    bridge_ports bond1
    bridge_fd 5
    bridge_stp off
    bridge_maxwait 1

Optional tagged interface for storage traffic

A dedicated VLAN tagged IP interface for e.g. storage traffic is again accomplished by creating a VLAN tagged bond and tying this to a dedicated bridge. As above we add the following to /etc/network/interfaces to create a new bridge on bond0 using VLAN 100:

auto bond0.100
iface bond0.100 inet manual
    vlan-raw-device bond0

auto cloudbr100
iface cloudbr100 inet static
    address 10.0.100.20
    netmask 255.255.255.0
    bridge_ports bond0.100
    bridge_fd 5
    bridge_stp off
    bridge_maxwait 1

Internal bridge cloud0

When using linux bridge networking the internal “cloud0” bridge is again handled by CloudStack, i.e. there’s no need for specific configuration to be specified for this.

Network startup

Note – once all network startup scripts are in place and the network service is restarted you may lose connectivity to the host if there are any configuration errors in the files, hence make sure you have console access to rectify any issues.

To make the configuration live restart the network service:

# service networking restart

To check the bridges use the brctl command:

# brctl show

bridge name     bridge id               STP enabled     interfaces
cloudbr0        8000.000c29b43c4d       no              bond0
cloudbr1        8000.000c29b43c61       no              bond1
cloudbr100      8000.000c29b43c4d       no              bond0.100

To check the VLANs and bonds:

# cat /proc/net/vlan/config

VLAN Dev name | VLAN ID
Name-Type: VLAN_NAME_TYPE_RAW_PLUS_VID_NO_PAD
bond0.100 | 100 | bond0

# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)
Primary Slave: None
Currently Active Slave: eth1
MII Status: up
MII Polling Interval (ms): 100
Up Delay (ms): 0
Down Delay (ms): 0

Slave Interface: eth1
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 10
Permanent HW addr: 00:0c:xx:xx:xx:xx
Slave queue ID: 0

Slave Interface: eth0
MII Status: up
Speed: 1000 Mbps
Duplex: full
Link Failure Count: 10
Permanent HW addr: 00:0c:xx:xx:xx:xx
Slave queue ID: 0

OpenVswitch bridge configuration

CentOS

To configure OVS bridges in CentOS we first add the bridges using the ovs-vsctl command, then add bonds to these:

# ovs-vsctl add-br cloudbr0
# ovs-vsctl add-br cloudbr1
# ovs-vsctl add-bond cloudbr0 bond0 eth0 eth1
# ovs-vsctl add-bond cloudbr1 bond1 eth2 eth3

This will configure the bridges in the OVS database, but the settings will not be persistent. To make the settings persistent we need to configure the network configuration scripts in /etc/sysconfig/network-scripts/, similar to when using linux bridges.

Each individual network interface has a generic configuration – note there is no reference to bonds at this stage. The following ifcfg-eth script applies to all interfaces:

# vi /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0
ONBOOT=yes
NM_CONTROLLED=no
BOOTPROTO=none
HWADDR=00:0C:xx:xx:xx:xx
HOTPLUG=no

The bonds reference the interfaces as well as the upstream bridge. In addition the bond configuration specifies the OVS specific settings for the bond (active-backup, no LACP, 100ms status monitoring):

# vi /etc/sysconfig/network-scripts/ifcfg-bond0

DEVICE=bond0
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBond
OVS_BRIDGE=cloudbr0
BOOTPROTO=none
BOND_IFACES="eth0 eth1"
OVS_OPTIONS="bond_mode=active-backup lacp=off other_config:bond-detect-mode=miimon other_config:bond-miimon-interval=100"
HOTPLUG=no

# vi /etc/sysconfig/network-scripts/ifcfg-bond1

DEVICE=bond1
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBond
OVS_BRIDGE=cloudbr1
BOOTPROTO=none
BOND_IFACES="eth2 eth3"
OVS_OPTIONS="bond_mode=active-backup lacp=off other_config:bond-detect-mode=miimon other_config:bond-miimon-interval=100"
HOTPLUG=no

The bridges are now configured as follows. The host IP address is specified on the untagged cloudbr0 bridge:

# vi /etc/sysconfig/network-scripts/ifcfg-cloudbr0

DEVICE=cloudbr0
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=static
IPADDR=192.168.100.20
NETMASK=255.255.255.0
HOTPLUG=no

Cloudbr1 is configured without an IP address:

# vi /etc/sysconfig/network-scripts/ifcfg-cloudbr1

DEVICE=cloudbr1
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=none
HOTPLUG=no

Internal bridge cloud0

In addition to the above we also need to configure the internal only cloud0 bridge. This is required when using OVS bridging only, i.e. when the network bridge kernel module has been disabled. If the module is enabled CloudStack will configure the internal bridge using linux bridge, whilst allowing all other bridges to be configured using OVS. Note the CloudStack agent will create this bridge, hence there is no need to configure it using the ovs-vsctl command.

Since there is no routing involved for the internal bridge we simply configure this with IP address 169.254.0.1/16:

# vi /etc/sysconfig/network-scripts/ifcfg-cloud0

DEVICE=cloud0
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=static
HOTPLUG=no
IPADDR=169.254.0.1
NETMASK=255.255.0.0

Optional tagged interface for storage traffic

If a dedicated VLAN tagged IP interface is required for e.g. storage traffic this is accomplished by creating a VLAN tagged fake bridge on top of one of the cloud bridges. In this case we add it to cloudbr0 with VLAN 100:

# ovs-vsctl add-br cloudbr100 cloudbr0 100

# vi /etc/sysconfig/network-scripts/ifcfg-cloudbr100

DEVICE=cloudbr100
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=static
IPADDR=10.0.100.20
NETMASK=255.255.255.0
HOTPLUG=no
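To confirm that the fake bridge ended up on the intended parent and VLAN, ovs-vsctl provides the br-to-parent and br-to-vlan subcommands. The following sketch wraps them in a hypothetical helper, check_fake_bridge; the OVS_VSCTL variable is likewise an assumption, added only so the check can be pointed at a stub when testing without OVS installed.

```shell
#!/bin/sh
# Sketch only: check_fake_bridge and the OVS_VSCTL override are hypothetical.
OVS_VSCTL=${OVS_VSCTL:-ovs-vsctl}

# Usage: check_fake_bridge NAME EXPECTED_PARENT EXPECTED_VLAN
check_fake_bridge() {
    name=$1
    want_parent=$2
    want_vlan=$3
    # br-to-parent prints the parent bridge, br-to-vlan prints the VLAN ID.
    parent=$($OVS_VSCTL br-to-parent "$name") || return 1
    vlan=$($OVS_VSCTL br-to-vlan "$name") || return 1
    if [ "$parent" = "$want_parent" ] && [ "$vlan" = "$want_vlan" ]; then
        echo "$name: VLAN $vlan on $parent"
    else
        echo "$name: expected VLAN $want_vlan on $want_parent, found VLAN $vlan on $parent"
        return 1
    fi
}

# Example:
#   check_fake_bridge cloudbr100 cloudbr0 100
```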

Additional OVS network settings

To finish off the OVS network configuration specify the hostname, gateway and IPv6 settings:

vim /etc/sysconfig/network

NETWORKING=yes
HOSTNAME=kvmhost1.mylab.local
GATEWAY=192.168.100.1
NETWORKING_IPV6=no
IPV6INIT=no
IPV6_AUTOCONF=no

VLAN problems when using OVS

Due to bugs in legacy network interface drivers there are in certain circumstances issues with getting VLAN traffic to propagate between KVM hosts. This is a known issue, and the OpenVswitch VLAN FAQ is a useful place to start any troubleshooting.

One workaround for this issue is to configure the “VLAN splinters” setting on the network interfaces. This is accomplished with the following command:

ovs-vsctl set interface eth0 other-config:enable-vlan-splinters=true

The problem arises when trying to make this setting persistent across reboots, as the command cannot be run as part of the normal ifcfg-eth script.

One way to make this persistent is to add the following lines to the end of the ifup-ovs script:

# vi /etc/sysconfig/network-scripts/ifup-ovs

if [ -x /sbin/ifup-localovs ]; then
    /sbin/ifup-localovs
fi

Then add the commands to a new file ifup-localovs:

# vi /sbin/ifup-localovs

#!/bin/sh
ovs-vsctl set interface eth0 other-config:enable-vlan-splinters=true
ovs-vsctl set interface eth1 other-config:enable-vlan-splinters=true
ovs-vsctl set interface eth2 other-config:enable-vlan-splinters=true
ovs-vsctl set interface eth3 other-config:enable-vlan-splinters=true

This will ensure the settings are applied to each network interface at every reboot and after each network service restart.
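On hosts with a different number of NICs, the same workaround can be written as a loop over interface names rather than one line per interface. This is just a sketch of that variation: enable_vlan_splinters is a hypothetical function name, and the OVS_VSCTL variable is an assumption added so the loop can be dry-run (for example with OVS_VSCTL=echo) before touching a live host.

```shell
#!/bin/sh
# Sketch only: enable_vlan_splinters and the OVS_VSCTL override are
# hypothetical; the ovs-vsctl command itself is the one shown above.
OVS_VSCTL=${OVS_VSCTL:-ovs-vsctl}

# Apply the VLAN splinters setting to every interface named as an argument.
enable_vlan_splinters() {
    for nic in "$@"; do
        $OVS_VSCTL set interface "$nic" other-config:enable-vlan-splinters=true
    done
}

# In /sbin/ifup-localovs the function would then be called once:
#   enable_vlan_splinters eth0 eth1 eth2 eth3
```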

Network startup

Note – as mentioned for linux bridge networking – once all network startup scripts are in place and the network service is restarted you may lose connectivity to the host if there are any configuration errors in the files, hence make sure you have console access to rectify any issues.

To make the configuration live restart the network service:

# service network restart

To check the bridges use the ovs-vsctl command. The following shows the optional cloudbr100 on VLAN 100:

# ovs-vsctl show

27daed4e-52f3-4177-9827-550f0e7df452
    Bridge "cloudbr1"
        Port "vnet2"
            Interface "vnet2"
        Port "bond1"
            Interface "eth3"
            Interface "eth2"
        Port "cloudbr1"
            Interface "cloudbr1"
                type: internal
    Bridge "cloud0"
        Port "cloud0"
            Interface "cloud0"
                type: internal
        Port "vnet0"
            Interface "vnet0"
    Bridge "cloudbr0"
        Port "cloudbr0"
            Interface "cloudbr0"
                type: internal
        Port "vnet3"
            Interface "vnet3"
        Port "vnet1"
            Interface "vnet1"
        Port "cloudbr100"
            tag: 100
            Interface "cloudbr100"
                type: internal
        Port "bond0"
            Interface "eth1"
            Interface "eth0"
    ovs_version: "2.3.1"

The bond status can be checked with the ovs-appctl command:

# ovs-appctl bond/show bond0

---- bond0 ----
bond_mode: active-backup
bond may use recirculation: no, Recirc-ID : -1
bond-hash-basis: 0
updelay: 0 ms
downdelay: 0 ms
lacp_status: off
active slave mac: 00:0c:xx:xx:xx:xx(eth0)

slave eth0: enabled
    active slave
    may_enable: true

slave eth1: enabled
    may_enable: true
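For monitoring purposes the active slave can be extracted from this output. The sketch below assumes the layout shown above, where each "slave NAME:" line is followed by an "active slave" marker for the member currently carrying traffic; ovs_bond_active_slave is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: ovs_bond_active_slave is a hypothetical helper that reads
# "ovs-appctl bond/show" output on stdin and prints the active member.

ovs_bond_active_slave() {
    awk '
        # Remember the most recent "slave NAME: ..." heading.
        /^slave [^ ]+:/ { name = $2; sub(/:$/, "", name) }
        # A line holding only "active slave" marks that member as active;
        # the "active slave mac:" summary line does not match this pattern.
        /^[ \t]*active slave[ \t]*$/ { print name }
    '
}

# Example:
#   ovs-appctl bond/show bond0 | ovs_bond_active_slave
```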

Ubuntu

First of all install the bonding kernel module and make sure this is added to /etc/modules such that it is loaded on startup:

# apt-get install ifenslave-2.6

# vi /etc/modules

# /etc/modules: kernel modules to load at boot time.
#
# This file contains the names of kernel modules that should be loaded
# at boot time, one per line. Lines beginning with "#" are ignored.
loop
lp
rtc
bonding

To ensure the linux bridge module isn’t loaded at boot time blacklist this module in /etc/modprobe.d/blacklist.conf:

# vim /etc/modprobe.d/blacklist.conf

Add the following line to the end of the file:

blacklist bridge

Ensure the correct hostname and FQDN are set in /etc/hostname and /etc/hosts respectively, and add the following lines to /etc/sysctl.conf:

# vi /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0

Same as for CentOS we first of all add the OVS bridges and bonds from command line using the ovs-vsctl command line tools:

# ovs-vsctl add-br cloudbr0
# ovs-vsctl add-br cloudbr1
# ovs-vsctl add-bond cloudbr0 bond0 eth0 eth1 bond_mode=active-backup other_config:bond-detect-mode=miimon other_config:bond-miimon-interval=100
# ovs-vsctl add-bond cloudbr1 bond1 eth2 eth3 bond_mode=active-backup other_config:bond-detect-mode=miimon other_config:bond-miimon-interval=100

As for linux bridge all network configuration is applied in /etc/network/interfaces:

# vi /etc/network/interfaces

Note that in the following example all individual network interface configuration is commented out, which works in Ubuntu 14.04. For Ubuntu 12.04 the individual interface sections are required.

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
#auto eth0
#iface eth0 inet manual
#auto eth1
#iface eth1 inet manual
#auto eth2
#iface eth2 inet manual
#auto eth3
#iface eth3 inet manual

allow-cloudbr0 bond0
iface bond0 inet manual
    ovs_bridge cloudbr0
    ovs_type OVSBond
    ovs_bonds eth0 eth1
    ovs_options bond_mode=active-backup other_config:miimon=100

auto cloudbr0
allow-ovs cloudbr0
iface cloudbr0 inet static
    address 172.16.152.39
    netmask 255.255.255.0
    gateway 172.16.152.2
    dns-nameservers 8.8.8.8
    dns-search mbplab.local
    ovs_type OVSBridge
    ovs_ports bond0

allow-cloudbr1 bond1
iface bond1 inet manual
    ovs_bridge cloudbr1
    ovs_type OVSBond
    ovs_bonds eth2 eth3
    ovs_options bond_mode=active-backup other_config:miimon=100

allow-ovs cloudbr1
iface cloudbr1 inet manual
    ovs_type OVSBridge
    ovs_ports bond1

Bridge startup in Ubuntu 12.04

The OpenVswitch implementation in Ubuntu 12.04 is slightly lacking compared to 14.04 and later. One thing which is missing is startup scripts for bringing the OVS bridges online. This can be accomplished by adding a custom bridge startup script similar to the following:

#!/bin/sh
# openvswitch-bridgestartup
#
# Starts OVS bridges at startup
#
### BEGIN INIT INFO
# Provides: openvswitch-bridgestartup
# Required-Start: openvswitch-switch
# Required-Stop:
# Default-Start: 2 3 4 5
# Default-Stop: 0 1 6
# Short-Description: Brings OVS bridges online at boot time
### END INIT INFO

IFCFG="/etc/network/interfaces"

# Bring up every OVS bridge that is also referenced in the interfaces file.
start() {
    BRIDGES=$(ovs-vsctl list-br)
    for BRIDGE in $BRIDGES; do
        if grep -q -w "$BRIDGE" "$IFCFG"; then
            ifup "$BRIDGE"
        fi
    done
}

case "$1" in
    start)
        start
        ;;
    stop)
        # Nothing to do on stop.
        ;;
    *)
        echo "Usage: $0 {start}"
        exit 2
        ;;
esac

Configure the service as follows:

# update-rc.d openvswitch-bridgestartup defaults 21

Internal bridge cloud0

In Ubuntu there is no requirement to add additional configuration for the internal cloud0 bridge; CloudStack manages this.

Optional tagged interface for storage traffic

Additional VLAN tagged interfaces are again accomplished by creating a VLAN tagged fake bridge on top of one of the cloud bridges. In this case we add it to cloudbr0 with VLAN 100:

# ovs-vsctl add-br cloudbr100 cloudbr0 100

# vi /etc/network/interfaces

Conclusion

As KVM is becoming more stable and mature, more people are going to start looking at using it rather than the more traditional XenServer or vSphere solutions, and we hope this article will assist in configuring host networking. As always we’re happy to receive feedback, so please get in touch with any comments, questions or suggestions.


Giles is CEO and founder of ShapeBlue and is responsible for overall company strategy, strategic relationships, finance and sales.
